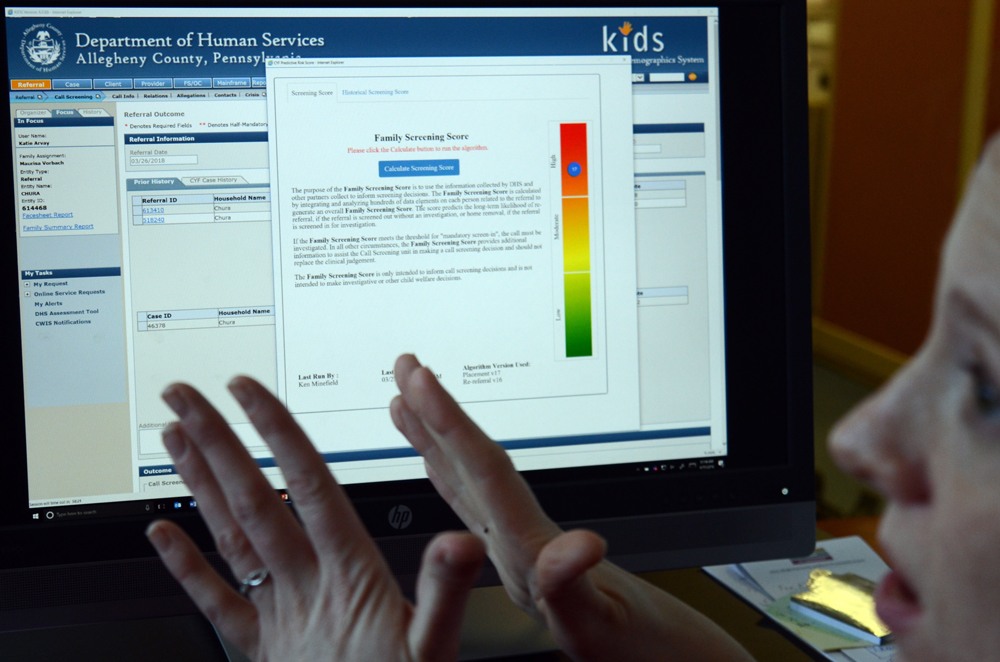

A new common sense has emerged regarding the perils of predictive algorithms. As the groundbreaking work of scholars like Safiya Noble, Cathy O’Neil, Virginia Eubanks, and Ruha Benjamin has shown, big data tools—from crime predictors in policing to risk predictors in finance—increasingly govern our lives in ways unaccountable and often unknown to the public. They replicate bias, entrench inequalities, and distort institutional aims. They devalue much of what makes us human: our capacities to exercise discretion, act spontaneously, and reason in ways that can’t be quantified. And far from being objective or neutral, technical decisions made in system design embed the values, aims, and interests of mostly white, mostly male technologists working in mostly profit-driven enterprises. Simply put, these tools are dangerous; in O’Neil’s words, they are “weapons of math destruction.”

These arguments offer an essential corrective to the algorithmic solutionism peddled by Big Tech—the breathless enthusiasm that promises, in the words of Silicon Valley venture capitalist Marc Andreessen, to “make everything we care about better.” But they have also helped to reinforce a profound skepticism of this technology as such. Are the political implications of algorithmic tools really so different from those of our decision-making systems of yore? If human systems already entrench inequality, replicate bias, and lack democratic legitimacy, might data-based algorithms offer some promise in addition to peril? If so, how should we approach the collective challenge of building better institutions, both human and machine?

“embed the values, aims, and interests of mostly white, mostly male technologists working in mostly profit-driven enterprises.”

This is the correct take, I think. Algorithms could (and can) produce much better outcomes than human-driven systems. But when they're wielded by capitalists, that's not what they're built to do. Humans wielded by capitalists suck, but they also (often) have compassion, conscience, and some awareness of the outcomes they might be contributing to, so they don't always make decisions or take action exactly the way the capitalists would have them. Algorithms, on the other hand, do exactly what they're told to do, exactly the way they're told to do it, no matter who is telling them or why. A computer will NEVER have a problem sending children to death camps, if that's what it's programmed to do.